Organic website users can generate as much as 40% of your total revenue.

Over 75% of all organic clicks go to the top three Google search results. However, reaching those top positions and capturing that coveted organic traffic can be difficult.

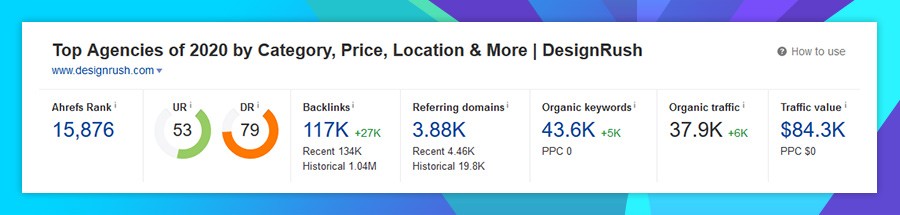

This was the case with DesignRush. Their robust website was not generating as much organic traffic as we believed it could.

DesignRush needed to eliminate all the technical SEO issues that were negatively impacting their rankings.

In this post, we explain how we not only achieved that but also increased their monthly organic users by a whopping 681%.


What Is DesignRush?

Our long-term client, DesignRush, is a rating platform and B2B marketplace connecting brands with digital agencies.

Their website features over 7,500 agencies in various industries, such as branding, web design, eCommerce development and more.

Via DesignRush, businesses can find a digital agency to partner with depending on their budget, location and requirements.

Assessing DesignRush’s Search Engine Optimization

DesignRush produces quality content and generates a healthy volume of links, so we turned to technical SEO to identify the main issues that plagued the website.

1. Domain Authority

The concept of domain or website authority was first developed by Moz. It is a metric that indicates a domain’s chances of appearing on a search engine results page.

Domain authority is usually measured on a scale from 0 to 100. The higher the score, the higher the possibility that the website will appear among the top search engine results.

According to Neil Patel and companies that provide similar solutions, such as Ahrefs, the main factor behind the domain authority score is inbound links.

The logic behind it is rather simple: the more high-authority websites link to your domain, the higher your domain ranking will be.

As for the exact measurements in Moz’s terms, a domain authority score of 60+ is considered high.

DesignRush’s overall domain authority and relevance suffered from a high percentage of unrelated anchors.

Unrelated anchors are clickable text that is irrelevant to the content it links to. In DesignRush’s case, most of these anchors pointed to the Resources-type pages that offered little value to the website visitors and negatively impacted the rankings.

Even though DesignRush had removed the Resources pages and the redirects, Google still saw the connection with the linking domains, because it had not been able to reprocess those pages and update its index.

2. Duplicate Content

As the name suggests, duplicate content is content that is identical or similar across multiple pages.

While duplicate content is not explicitly harmful, it can still negatively affect a website’s search engine ranking.

Duplicate content can be intentional and unintentional:

- Intentional duplicate content is usually created by website owners in an attempt to improve their search engine ranking. However, this tactic is hardly effective, as it makes the website harder for both users and search engine bots to navigate. This results in poor user experience and poor search engine rankings. Examples of intentional duplicate content include unoriginal guest blog posts or doorway pages that only exist to rank for a specific keyword.

- Unintentional duplicate content is more common and, in most cases, benign. It includes things such as print-only versions of pages or URLs with analytics parameters. While this content is typically non-malicious, it can still cause ranking issues if not treated properly.
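As a rough illustration of the analytics-parameter case, the sketch below groups URLs that point to the same page once tracking parameters are removed. The parameter names (`utm_*`, `gclid`, `fbclid`) and the `example.com` URLs are assumptions for illustration, not DesignRush's actual setup.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumed analytics parameters; extend these to match your own tracking setup.
TRACKING_PREFIXES = ("utm_",)
TRACKING_NAMES = {"gclid", "fbclid"}


def strip_tracking_params(url: str) -> str:
    """Return the URL with analytics-only query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if not name.lower().startswith(TRACKING_PREFIXES)
        and name.lower() not in TRACKING_NAMES
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


def group_duplicates(urls):
    """Group URLs that collapse to the same address without tracking parameters."""
    groups = {}
    for url in urls:
        groups.setdefault(strip_tracking_params(url), []).append(url)
    # Only groups with more than one variant are potential duplicates.
    return {clean: variants for clean, variants in groups.items() if len(variants) > 1}
```

Running `group_duplicates` over a crawl export gives a quick list of pages that exist under several addresses and may need a canonical tag or a redirect.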

Apart from simply deleting the duplicated content, there are a few common ways of resolving duplicate content issues, including:

- 301 redirect: The most common approach to duplicate content is to set up a 301 redirect from the duplicate page to the original content page.

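- Canonicalization: Canonicalizing a URL that has duplicates means designating it as original and “canonical.” This tells search engines which version to index and show in results, and lets them consolidate ranking signals from the duplicates onto it. Search engines treat the canonical tag as a strong hint rather than a command, and they may still crawl the duplicates.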

- “Noindex” tag: Adding the “noindex” tag to duplicated web pages will allow the search engine bots to access the pages without adding them to the search engine’s database. As such, users will not be able to access these pages via search engine results.
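To check which of these signals a page actually sends, a small script can read the canonical link and the robots meta tag from its HTML. This is a minimal sketch using only Python's standard library; the function name `indexing_signals` is hypothetical, and it looks only at the HTML, not at HTTP headers such as `X-Robots-Tag`.

```python
from html.parser import HTMLParser


class IndexingSignals(HTMLParser):
    """Collect the canonical URL and the noindex directive from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonical = attrs.get("href")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = (attrs.get("content") or "").lower()
            directives = [d.strip() for d in content.split(",")]
            if "noindex" in directives:
                self.noindex = True


def indexing_signals(html: str):
    """Return the canonical URL (or None) and whether the page is noindexed."""
    parser = IndexingSignals()
    parser.feed(html)
    return {"canonical": parser.canonical, "noindex": parser.noindex}
```

A page that returns `canonical: None` and `noindex: False` sends neither signal, which is the situation that makes duplicates hardest for a search engine to sort out.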

In DesignRush’s case, we discovered that 3,000+ auto-generated location-type category pages had thin or duplicate content.

The “noindex” tag that was supposed to prevent Google from indexing these pages was not effective, because Google didn’t crawl the pages following the addition of the tag.

All of the pages under the regular and the “index.php” DesignRush domains were also indexed, resulting in yet another duplicate issue. The lack of canonical tags further heightened the problem.


3. Technical Onsite Issues

In addition to the major problems mentioned above, we also discovered a few minor technical onsite issues on DesignRush’s website.

While these issues typically have a low SEO impact, fixing them was instrumental to having a technically clean website.

These minor technical issues were:

- Missing relevant Alt attributes: Adding relevant alternative text to images makes the web content more accessible for visually impaired users. Alt attributes that include the target keywords can also have a positive effect on SEO.

- Broken internal 301 redirects: Even though DesignRush implemented some 301 redirects for duplicate content, not all of them were functioning correctly.

- 4XX errors: These are client-side errors, where the server cannot fulfill a request because of a problem with the request itself, such as a malformed request, missing permissions or a URL that no longer exists. Some of the more common 4XX errors include 400 (Bad Request), 403 (Forbidden) and 404 (Not Found).

- Duplicate metadata: In addition to duplicate pieces of content, DesignRush’s website also featured duplicate metadata on some of their webpages.
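Broken redirects and 4XX errors of the kind listed above can be found by walking through recorded crawl results. The sketch below assumes a hypothetical `responses` mapping from each URL to its status code and redirect target (for example, exported from a crawler). It is an illustration of the check, not the audit tooling used on the project.

```python
REDIRECTS = {301, 302, 307, 308}


def resolve(url, responses, max_hops=10):
    """Follow recorded redirects from url; return (final_url, final_status, hops)."""
    seen = set()
    hops = 0
    while hops <= max_hops:
        if url in seen:
            return url, "loop", hops
        seen.add(url)
        status, location = responses.get(url, (None, None))
        if status in REDIRECTS and location:
            url = location
            hops += 1
            continue
        return url, status, hops
    return url, "too many hops", hops


def broken_redirects(responses):
    """Report redirects that do not end on a 200 page or that chain more than once."""
    problems = {}
    for url, (status, _location) in responses.items():
        if status not in REDIRECTS:
            continue
        final_url, final_status, hops = resolve(url, responses)
        if final_status != 200:
            problems[url] = f"ends in {final_status} at {final_url}"
        elif hops > 1:
            problems[url] = f"chain of {hops} redirects"
    return problems


def client_errors(responses):
    """Return the URLs that answered with a 4XX status."""
    return sorted(
        url
        for url, (status, _location) in responses.items()
        if status is not None and 400 <= status < 500
    )
```

A redirect that lands on a 404, loops back on itself or passes through several hops shows up in `broken_redirects`, while `client_errors` lists the pages that need to be restored, redirected or unlinked.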

Increasing DesignRush’s Domain Authority

After auditing DesignRush’s ranking issues, we came up with three solutions to help improve the ranking and the authority of their website.

1. Indexing

The deleted pages negatively affected DesignRush’s domain authority and relevance. Even though these pages did not exist on the website anymore, Google still stored them in its database. To resolve this issue we had to ensure that Google indexed the entire domain properly.

Indexing is the second and, arguably, the most important step of the search process. After the search engine bots crawl the webpages, the search engine analyzes, organizes and stores them in its index.

To improve the domain authority as well as the rankings, Digital Silk created a new Google Search Console account to verify all domains and submit them to Google, so the search engine would update their status.

To resolve the issue of indexed duplicate pages under two different domains, we implemented a redirect from all “index.php” domains to their counterpart under the standard domain.
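The mapping behind such a redirect can be sketched in a few lines. The example assumes the duplicates differ from the standard URLs only by an `index.php` path segment, which is a guess about the URL shape, and `standard_url` is a hypothetical helper. In practice the 301 redirect itself is usually configured at the web server or application level.

```python
from urllib.parse import urlsplit, urlunsplit


def standard_url(url: str):
    """Return the redirect target for an index.php URL, or None if none is needed."""
    parts = urlsplit(url)
    segments = parts.path.split("/")
    if "index.php" not in segments:
        return None  # Already a standard URL; no redirect required.
    cleaned = [segment for segment in segments if segment != "index.php"]
    path = "/".join(cleaned) or "/"
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))
```

Each URL that returns a target would then be served with a 301 status pointing to that target, so that only one version of every page remains indexable.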

2. Content Cleanup

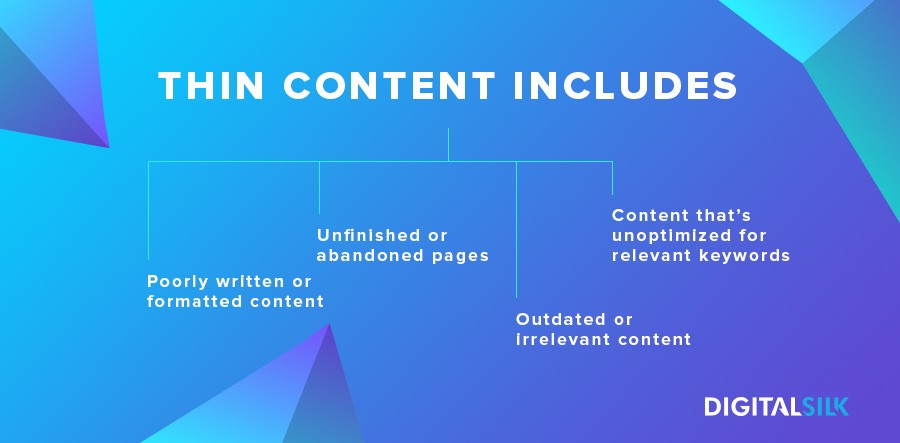

Just like duplicate content, content that adds little or no value to the website can also negatively affect the website’s Google ranking.

Such content includes:

- Poorly written or formatted blog posts

- Unfinished or abandoned pages (also known as “stubs”)

- Outdated content

- Content that’s optimized for irrelevant keywords

We undertook a major content cleanup and deleted thousands of duplicate and thin content pages.

Afterward, we submitted to Google a new sitemap that listed all of the deleted pages.

This sitemap allowed the search engine to verify the pages as deleted. We then removed the sitemap once it had served its purpose.
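A temporary sitemap like the one described above can be generated with a short script. This sketch follows the standard sitemap XML format, and the `build_sitemap` name and the example URLs are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as bytes) with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # Special characters are escaped on output.
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    # Hypothetical list of removed pages.
    deleted = ["https://example.com/resources/old-page", "https://example.com/city/thin-page"]
    print(build_sitemap(deleted).decode("utf-8"))
```

A single sitemap file is limited to 50,000 URLs, so a larger list of deleted pages would need to be split across several files.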

We also excluded from Google Search the dynamic pages generated by the Sorting function, which were causing duplicate issues.

3. Canonicalization

Over 11 million websites rely on canonicalization to avoid duplicate content issues.

This is, by far, the most effective approach to duplicate content, as it does not necessarily require removing the duplicate content.

And while it does not prevent the user experience issues that arise from duplicate content, it mitigates that content’s effect on the search engine performance of the website.

We set up the correct canonical tags and fixed the duplicate content issue caused by pagination to ensure that Google displays the website’s correct URLs in its result pages.

Upgrading DesignRush’s Content Strategy

Upgrading DesignRush’s content strategy was the next step in our improvement plan. We achieved that by turning to the solutions below.

1. Internal Link Anchoring

Internal links on a webpage are links that lead to other pages of the website.

The goal of internal linking is to make a visitor stay on the website for as long as possible and see as much of the website’s content as possible.

For internal links to be visible and compelling to users, they should have clear, descriptive anchors.

A link anchor is a clickable word or a phrase that takes the user to the linked page.

Some of the best practices for internal link anchoring include:

- Making the anchors as long as possible to improve visibility

- Anchoring internal links to keywords

- Ensuring that the anchor text is unique and relevant to the linked page

To address the high number of unrelated anchors that were affecting domain relevancy, our Digital Silk team improved the internal anchor texts with more exact matches and more intuitive anchors.
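One simple way to find candidates for this kind of rewrite is to list internal links whose anchor text is empty or generic. The sketch below uses Python's standard library, and the `GENERIC_ANCHORS` list is an assumed starting point rather than a definitive set. Checking true relevance between an anchor and its target page still needs human review.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit

# Assumed examples of anchors that say nothing about the linked page.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "this page"}


class AnchorCollector(HTMLParser):
    """Collect (absolute_href, anchor_text) pairs from a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # Normalize whitespace.
            self.links.append((self._href, text))
            self._href = None


def weak_internal_anchors(html, base_url):
    """Return internal links whose anchor text is empty or generic."""
    collector = AnchorCollector(base_url)
    collector.feed(html)
    host = urlsplit(base_url).netloc
    return [
        (href, text)
        for href, text in collector.links
        if urlsplit(href).netloc == host
        and (not text or text.lower() in GENERIC_ANCHORS)
    ]
```

Each flagged link is a candidate for a more specific anchor that describes the page it points to.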

2. Content Audit

51% of marketers believe that updating existing content is one of the most effective content marketing strategies.

DesignRush featured plenty of valuable, well-written content on their website. However, not all of it was optimized for relevant keywords and some of the content was outdated.

We reoptimized content on all of the main pages by auditing target keywords and repurposing content where necessary.

Our team also improved the SEO content on pages using our internal SEO audit checklists.

3. External Linking

Unlike an internal link, an external link leads to another domain.

External links can also have a significant effect on the SEO performance of a website.

In a nutshell, if a webpage receives a lot of inbound external links (a lot of third-party websites link to it), the search engine ranking of the website is likely to improve.

The more often a page is linked to, the more authoritative and trustworthy it will be considered by Google.

However, it is up to the website manager to decide whether Google should consider an external link to another website as a sign of that website’s authority and, subsequently, improve that website’s ranking.

The most common tool for resolving this issue is the “nofollow” attribute. If an external link is marked as “nofollow,” it asks search engines not to pass ranking credit to the linked website. Google treats the attribute as a hint rather than a strict directive.

When analyzing DesignRush’s external linking structure, we found that all outbound external links were “nofollow.”

While this is a safe approach, it is not always beneficial to either the linking website or the linked website.

That’s why we implemented a mix of “nofollow” and “dofollow” outgoing links on DesignRush’s article pages in place of having all outgoing links as “nofollow,” as was the case before.
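To see how a page's outbound links are currently split, the links can be grouped by their `rel` attribute. Note that “dofollow” is not an actual attribute value: a followed link is simply one without `rel="nofollow"`. The class and function names below are hypothetical, and the sketch ignores the related `sponsored` and `ugc` values.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class OutboundLinks(HTMLParser):
    """Split a page's external links into followed and nofollow lists."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.host = urlsplit(base_url).netloc
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        target = urljoin(self.base_url, href)
        parts = urlsplit(target)
        # Skip internal links and non-web schemes such as mailto:.
        if parts.scheme not in ("http", "https") or parts.netloc == self.host:
            return
        rel = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel:
            self.nofollow.append(target)
        else:
            self.followed.append(target)


def outbound_link_report(html, base_url):
    """Return (followed, nofollow) lists of external link targets."""
    parser = OutboundLinks(base_url)
    parser.feed(html)
    return parser.followed, parser.nofollow
```

A page where the first list is always empty matches the all-“nofollow” pattern found in the audit.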

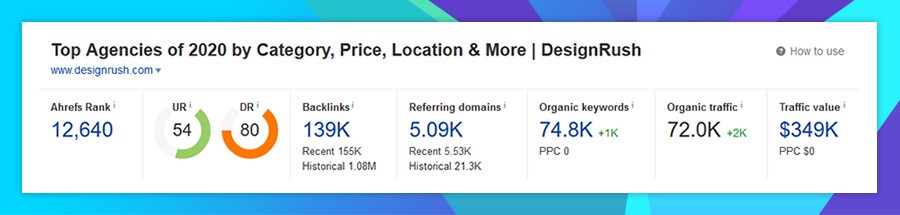

Our Results & Takeaways

After six months of following our SEO strategy for DesignRush, we achieved:

- 681% increase in monthly organic users

- 328% improvement in goal completions

- +4,433 top 10 positions

- +39,875 top 100 positions

The DesignRush case proves more than the great SEO impact of domain authority.

It also shows how seemingly trivial factors, such as incorrect 301 redirects, can negatively affect domain authority and the overall ranking of a website.

Furthermore, it showcases the great power of content and demonstrates why content should always be unique, relevant and well-optimized.
